Posts

-

Women in Policing: Health, Justice, and Organisational Culture — A Wee Chat with Dr Dr Mahnoz Illias

Dr Dr Mahnoz Illias completed her PhD at the University of Glasgow in 2025. Aye, she really is a double doctor 😎: she originally earned a medical degree in Bangladesh, before completing an MSc in Global Mental Health at the University of Glasgow, supported by the Commonwealth Scholarship Commission. Her PhD research examined policing as a gendered organisation and its impact on the health and well-being of female police staff and officers in the UK. Here, I had a wee chat with her about her research and perspectives.

[Read more] -

Invited talk at CODAI, HiPEAC 2026

I was delighted to be invited to both give a talk, and participate in the panel discussion at the CODAI workshop (Workshop on Compilers, Deployment, and Tooling for AI) at HiPEAC 2026 (Kraków, Poland).

My talk, titled “The Compiler Before the Horse: Design Space Exploration at Fractile,” focused on how investing in compiler development early in our hardware design process has been essential for effective design space exploration and for making well-informed architectural decisions.

[Read more] -

You Can Do It Too: Inside Scotland's Post-Punk Press with Glenn Gibson

In this post, I chat with former New Musical Express (NME) writer Glenn Gibson (aka my da) about his experiences breaking into music journalism in the 1970s and 80s, the evolution of music publications, the development of Scotland’s music scene during the punk and post-punk era, and various other bits and pieces.

[Read more] -

Invited talk at Alces Flight 2025

I was honoured to be invited to give a talk at the Alces Flight 2025 conference, which took place in London, UK, in October 2025.

My talk discussed how interdisciplinary collaboration and playfulness can be powerful tools, especially for early-career researchers and engineers.

I shared some of my experiences from my PhD and industry work.

-

How We Survived Mac and Even Laughed

At work, we recently transitioned everyone to MacBooks. I had never used one before, having spent the past decade on Linux laptops that I’ve gradually refined to my liking.

Here’s an inconvenient truth: these machines are not designed to meet my needs, and modifying them to meet those needs has been a challenge. So as not to alienate my readers, I should stress that this doesn’t mean folk with other needs are wrong. Apple clearly has a particular set of users in mind; I’m just not one of them.

My philosophy is that the tools I use should behave the way I tell them to. This post describes some of the issues I’ve encountered trying to achieve that, and how I’ve worked around them. Overall, I’ve been able to get a workable setup.

[Read more] -

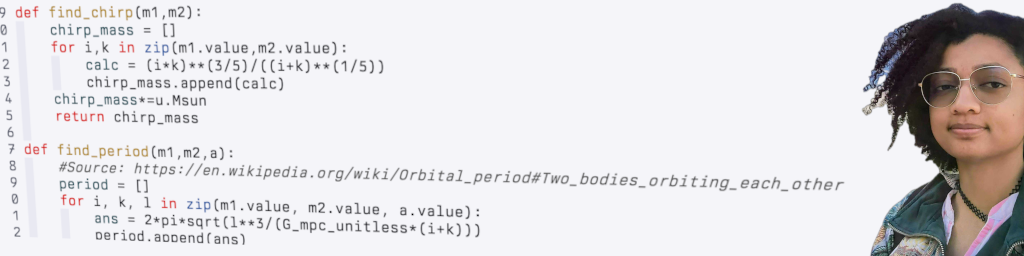

Show Me Your Code: Binary White Dwarfs & Melize Ferrus

I’ve been chatting recently with Melize Ferrus, who is currently working on a PhD in physics, studying various aspects of gravitational waves.

It’s not an area I have much expertise in, but through a series of chats, playing around with some of Melize’s code, and making some fun demos, I’ve learned a bit, so I thought I’d discuss it here. This is not a deep dive into the physics, but rather an exercise in cross-discipline communication, and a chance to play with some Python code. I wrap up with a Q&A to hear Melize’s take on physics and collaboration.
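As a flavour of the kind of Python involved, here is a minimal sketch (not Melize’s code) of a textbook relation for a circular binary: Kepler’s third law gives the orbital frequency, and the dominant (quadrupole) gravitational-wave signal is emitted at twice that frequency. The masses and separation used are illustrative assumptions, not values from the post.

```python
import math

# Physical constants in SI units.
G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30   # solar mass, kg


def orbital_frequency(m1_kg: float, m2_kg: float, separation_m: float) -> float:
    """Orbital frequency (Hz) of a circular binary, from Kepler's third law."""
    total_mass = m1_kg + m2_kg
    angular_freq = math.sqrt(G * total_mass / separation_m**3)  # rad/s
    return angular_freq / (2 * math.pi)


def gw_frequency(m1_kg: float, m2_kg: float, separation_m: float) -> float:
    """Dominant gravitational-wave frequency: twice the orbital frequency."""
    return 2 * orbital_frequency(m1_kg, m2_kg, separation_m)


if __name__ == "__main__":
    # Hypothetical example: two 0.6 solar-mass white dwarfs, 1e8 m apart.
    m = 0.6 * M_SUN
    a = 1e8
    print(f"f_orb = {orbital_frequency(m, m, a):.3e} Hz")
    print(f"f_gw  = {gw_frequency(m, m, a):.3e} Hz")
```

For these illustrative values, the gravitational-wave frequency comes out at roughly 4 mHz; bringing the pair closer together raises it, scaling as separation to the power of -3/2.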

[Read more] -

Simple Workflow Tweaks for Scientific Python Projects

I’ve recently been chatting with a couple of mates who are doing scientific research using Python. Answering specific code questions is a lot of fun, as I try to figure out enough of their domain to see if/how I can help.

However, there are some general workflow improvements that I think both they and other scientific Python programmers could benefit from. In this post, I’ll share some lightweight workflow improvements that can help make your code better.

[Read more] -

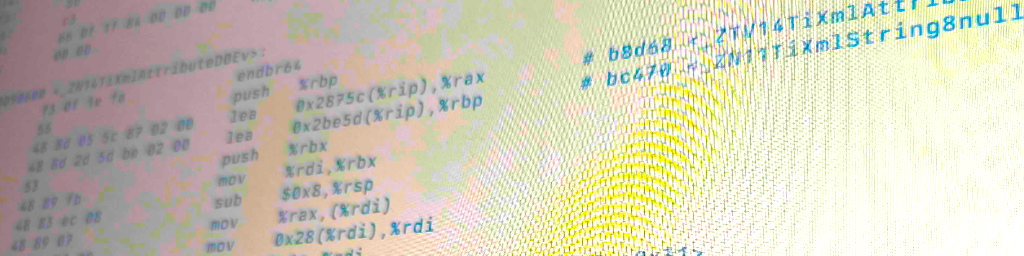

Calling Clang's Assembler from C++

LLVM is a collection of modular and reusable compiler and toolchain technologies. It provides a set of libraries and tools for building compilers, assemblers, linkers, and other related tools.

In a project I was working on, we were using the `clang` part of LLVM to compile C and LLVM IR code. The code paths for these two sources were similar enough that we could have a single `compile` function that passed all the configuration flags for our use case. However, for a new feature, I was generating assembly files directly and wanted to assemble them into an object file. This post briefly explains how I got this working, and how you can use the `clang` libraries to assemble assembly files in C++.

[Read more] -

Open Source: Petit Pois, a Podcast Archiver and Feed Generator

I’ve just released a new open-source project called Petit Pois, available on my GitHub, written in Python.

It’s a podcast archive tool and feed generator that allows you to archive podcast episodes and generate custom feeds for them. The project is designed to be simple and easy to use, with a focus on flexibility and customisation. I have a sketch of a workflow for how we can generate private token URLs, and integrate it with a web server such as Nginx to serve the files.
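To make the idea of a custom feed with private token URLs concrete, here is a minimal sketch using only the Python standard library. It is not Petit Pois’s actual code: the function names, the `episodes` structure, and the URL layout (a random token as a path segment, which a web server such as Nginx would map to the archive directory) are all assumptions for illustration.

```python
import secrets
import xml.etree.ElementTree as ET
from urllib.parse import quote


def make_token() -> str:
    """Return an unguessable, URL-safe token to embed in private feed URLs."""
    return secrets.token_urlsafe(16)


def build_feed(title: str, base_url: str, token: str, episodes: list[dict]) -> str:
    """Build a minimal RSS 2.0 feed whose enclosure URLs include a private token.

    Each episode dict is assumed (hypothetically) to have
    'title', 'filename', and 'size_bytes' keys.
    """
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    # RSS 2.0 requires title, link, and description on the channel.
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = f"{base_url}/{token}/"
    ET.SubElement(channel, "description").text = f"Private archive of {title}"

    for ep in episodes:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = ep["title"]
        # Enclosures need url, length (bytes), and MIME type attributes.
        ET.SubElement(
            item,
            "enclosure",
            url=f"{base_url}/{token}/{quote(ep['filename'])}",
            length=str(ep["size_bytes"]),
            type="audio/mpeg",
        )
    return ET.tostring(rss, encoding="unicode")
```

Note that a token in the URL is access control by obscurity: anyone without the token cannot guess the URLs, but anyone who is given the feed URL can share it.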

Most podcasts are shite, but it’s still important that we have archival tools available, since some of them may be important to preserve for future generations.

The tool is intended for personal archival, preservation, and research use only, as redistributing or republishing podcast episodes without permission may be illegal. Big ol’ disclaimer: I encourage users to respect the rights of content creators and follow copyright laws.

-

C4ML Talk: Rapid Compiler Prototyping using MLIR Python Bindings: A Case Study from Fractile

I was chuffed to present at the 6th C4ML workshop (Compilers for Machine Learning), at CGO 2025 in Las Vegas, NV, USA.

There, I presented “Rapid Compiler Prototyping using MLIR Python Bindings: A Case Study from Fractile”.

This work was a case study on how we were able to leverage the MLIR Python bindings to quickly develop and iterate upon our initial compiler prototypes — all the way to compiling and correctly executing a realistic workload on our functional simulator.

We’ve since moved further along in our development process, but these early stages wouldn’t have been as smooth without the tools and support of the MLIR and LLVM community.

Vegas was interesting. I’m glad a city like that exists, but I’m also glad that not every city is like that. It feels like everything is pretending to be something else.

-

Cheesing the Railcard System for Fun and Discounts

I recently applied for a Railcard for my partner, who was visiting the UK. They were eligible for a 26-30 Railcard, which offers a discount on train journeys. However, they are from Belgium, which turned out to be an edge case in the sign-up form, making it appear impossible to apply for the Railcard.

This is the story of how I cheesed the system, and was able to get the discount for them despite this.

[Read more] -

WeeNet Manifesto

Models of the world, unite; you have nothing to lose but your priors!

This post serves as a manifesto for the term “WeeNet,” which I’ve been using for the past few years. Conceptually it’s nothing new (either in machine learning or general software development), but I believe that the term is a useful one to describe a particular way of thinking about machine learning (ML) systems.

[Read more] -

New Role: Fractile

Pleased to say I’ve just started a new role at Fractile, an AI hardware startup, working on data-centre tier in-memory AI accelerators.

I’ll be bringing my across-stack ML systems perspective as a member of technical staff, with a focus on compilers, and contributing to our wider design space exploration activities.

-

DNN64: An ML Compiler Toolchain for the Nintendo 64 (Part 4) --- The Co-Processor

This post is the fourth in my DNN64 series, where I discuss my project of compiling and accelerating deep neural networks (DNNs) on the Nintendo 64 system. My first post is available here, and the goal is to use modern tools and techniques on this constrained platform. This post discusses the N64’s co-processor, the RSP, which I am using to accelerate my DNN computations.

[Read more] -

DNN64: Invited Talk

I was delighted to give an invited talk at my alma mater (University of Glasgow) on my DNN64 project, where I have been building an accelerated DNN compiler for the retro N64 console (using modern tools and techniques!)

-

DNN64: An ML Compiler Toolchain for the Nintendo 64 (Part 3) --- Activation Maps

This post is the third in my DNN64 series, where I discuss my project of compiling and accelerating deep neural networks (DNNs) on the Nintendo 64 system. My first post is available here, and the goal is to use modern tools and techniques on this constrained platform. Building on the limited-memory challenges discussed in the previous post, this post looks at the memory requirements of the activation maps.
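A back-of-the-envelope sketch of why activation maps matter: in simple layer-by-layer execution, a layer’s input and output must be live at the same time, so peak activation memory is at least the largest input-plus-output pair. The layer shapes below are hypothetical, the model is assumed to be a plain chain (no skip connections), and this is not DNN64’s actual memory planner.

```python
# Bytes per element for some common storage types.
BYTES_PER_ELEM = {"float32": 4, "int16": 2, "int8": 1}


def activation_bytes(shape: tuple[int, int, int], dtype: str = "float32") -> int:
    """Size in bytes of one activation map with shape (channels, height, width)."""
    c, h, w = shape
    return c * h * w * BYTES_PER_ELEM[dtype]


def peak_chain_bytes(shapes: list[tuple[int, int, int]], dtype: str = "float32") -> int:
    """Peak memory for a straight-line network where each layer reads one
    activation map and writes the next, and only that pair is kept live."""
    sizes = [activation_bytes(s, dtype) for s in shapes]
    return max(a + b for a, b in zip(sizes, sizes[1:]))


if __name__ == "__main__":
    # Hypothetical small CNN: the input image, then three layer outputs.
    shapes = [(3, 32, 32), (16, 32, 32), (32, 16, 16), (64, 8, 8)]
    for dtype in ("float32", "int8"):
        print(dtype, peak_chain_bytes(shapes, dtype), "bytes")
```

Here the peak falls at the second layer, where a wide 16×32×32 input meets a 32×16×16 output; reducing the storage type from 32-bit floats to 8-bit integers cuts that peak by a factor of four.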

[Read more] -

DNN64: An ML Compiler Toolchain for the Nintendo 64 (Part 2) --- Weighty Matters

This post is the second in my DNN64 series, where I discuss my project of compiling and accelerating deep neural networks (DNNs) on the Nintendo 64 system. My first post is available here. This post discusses the challenges posed by the console’s limited memory, given the high memory requirements of DNNs, and the changes we need to make to our code generator to use memory more efficiently and thus run larger models. In particular, we’ll look at this from the perspective of the DNN weights (also known as parameters).
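As a rough illustration of the weight side, a 2D convolution stores C_out × C_in × K × K weights plus one bias per output channel, and the storage type sets the bytes per parameter. A minimal sketch with a hypothetical layer; for scale, the N64’s base RDRAM is 4 MB (8 MB with the Expansion Pak), shared with everything else the program needs.

```python
def conv2d_weight_bytes(c_in: int, c_out: int, k: int,
                        bytes_per_param: int = 4, bias: bool = True) -> int:
    """Bytes needed to store a KxK Conv2D layer's weights (and optional bias)."""
    params = c_out * c_in * k * k
    if bias:
        params += c_out  # one bias value per output channel
    return params * bytes_per_param


# Base console memory without the Expansion Pak (4 x 1024 x 1024 bytes).
N64_RDRAM_BYTES = 4 * 1024 * 1024

if __name__ == "__main__":
    # Hypothetical 3x3 conv with 64 input and 64 output channels.
    fp32 = conv2d_weight_bytes(64, 64, 3, bytes_per_param=4)
    int8 = conv2d_weight_bytes(64, 64, 3, bytes_per_param=1)
    print(f"fp32: {fp32} bytes ({fp32 / N64_RDRAM_BYTES:.1%} of 4 MB)")
    print(f"int8: {int8} bytes ({int8 / N64_RDRAM_BYTES:.1%} of 4 MB)")
```

A single layer like this is only a few percent of memory in 32-bit floats, but a model stacks many such layers, which is why shrinking the bytes per parameter directly increases the size of model that fits.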

[Read more] -

DNN64: An ML Compiler Toolchain for the Nintendo 64 (Part 1)

In the first of a series of blogposts, I discuss my experience developing and accelerating a compiler toolchain for running deep neural networks on the Nintendo 64 (1996). I endeavoured to use modern tools (e.g., Apache TVM), and acceleration techniques which are relevant to today’s models and hardware accelerators. But of course there will be special considerations required to fit them to the unique hardware and software environment of this beloved (by some, it was out before I was born) games console. This first post will give an overview of my system design, and future posts will go deeper into individual challenges (in ML, compilers, and hardware).

[Read more] -

Press: HiPEAC Info 71

I’m pleased to say that I have been featured in issue 71 of the HiPEAC Info magazine, available here from the HiPEAC website. You can find it on pages 48-49. During the interview with a HiPEAC team member, I had the opportunity to discuss my PhD journey, share some of the techniques I developed, and offer insights that could be beneficial to current and prospective PhD candidates.

-

How to Instantly Open Files at Specific Positions in KDE Konsole

I often use KDE Konsole for running terminal commands, but sometimes I’m using a tool (e.g., a compiler) which outputs a file path, as well as a line number, which I may want to open in my text editor. E.g.,

2 errors generated.
In file included from /home/proj/lib/AsmParser/Parser.cpp:13:
/home/proj/include/mlir/IR/MLIRContext.h:253:18: error: use of undeclared identifier 'Operation'; did you mean 'operator'?

Wouldn’t it be handy if we could just click on/select the file in the terminal output, and open it in our text editor in the right place? This would reduce friction when debugging, potentially increasing productivity.
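The core of this is recognising `path:line:column` locations in terminal output. Below is a minimal sketch of that parsing step in Python, turning a match into an editor command. It is not Konsole’s own mechanism, nor necessarily the approach the full post takes; the regex and the editor arguments (Emacs’s `+LINE:COLUMN` and VS Code’s `--goto FILE:LINE:COLUMN`) are assumptions for illustration.

```python
import re

# Matches "/abs/path/file.ext:LINE" with an optional ":COLUMN" suffix.
LOCATION_RE = re.compile(r"(?P<path>/[^\s:]+):(?P<line>\d+)(?::(?P<col>\d+))?")


def find_locations(text: str) -> list[tuple[str, int, int]]:
    """Return (path, line, column) tuples for every location found in text.

    Column defaults to 1 when the tool only reports a line number.
    """
    return [
        (m["path"], int(m["line"]), int(m["col"] or 1))
        for m in LOCATION_RE.finditer(text)
    ]


def editor_command(path: str, line: int, col: int, editor: str = "code") -> list[str]:
    """Build an argv list that opens path at line/col in the chosen editor."""
    if editor == "emacs":
        return ["emacs", f"+{line}:{col}", path]
    if editor == "code":
        return ["code", "--goto", f"{path}:{line}:{col}"]
    raise ValueError(f"unsupported editor: {editor}")
```

The returned argv list could then be handed to `subprocess.run`; keeping it as a list avoids shell-quoting problems with unusual file paths.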

[Read more] -

Step-by-step Guide to Adding a New Dialect in MLIR

For one of my projects, I needed to add a new dialect to the main MLIR tree. However, following the information available, I encountered some issues. I made a “clean” example dialect, which I was able to add correctly. This post discusses how this is achieved, and links to some code.

[Read more] -

Retro-AI Christmas Fireplace

I’m sure many folk are sick of hyper-realistic AI-generated images

Let’s take it back, way back, antique style, 2021

In between peeling tatties with my family for Christmas, I dusted off my old notebooks to produce this VQGAN+CLIP AI Fireplace

Warm yourself on the latents: https://youtu.be/pZvYmcRA6U0

This uses the same stack that big Sean Cosgrove and I used for the “Bloodshot” music video, as seen in DJ Magazine and this blogpost.

Happy holidays to aw yoos.

-

Google Project Management: Professional Certificate

In this post, I am pleased to announce that I have received a certificate for completing the Google Project Management course, comprising six modules over six months. Although I have gained significant experience in managing projects during my time at gicLAB, as well as in my other professional endeavours, I felt that re-familiarising myself with the terminology and best practices of the field would serve me well. You can find my certificate of completion here.

[Read more] -

PhD Completed!

Pleased to broadcast that I have completed my PhD at University of Glasgow!

My thesis title was “Compiler-centric Across-stack Deep Learning Acceleration”. In plain English, this means that neural networks (aka “AI”) are expensive, and to make them scale, we need to collaborate across machine learning, software, and hardware domains. My opinion is that compilers will be an increasingly important piece of this co-design challenge.

Many thanks to Dr José Cano Reyes for his tireless mentorship and support, which has helped shape me into the researcher and engineer I am today, even through a global pandemic. We logged over 470 hours of one-on-one meetings — I challenge you to find someone more dedicated to his students.

Additional thanks go out to my friends and colleagues at gicLAB, University of Glasgow, and beyond. Not to mention my ever supportive family, pals, lovers, and enemies.

Interested in advancing deep learning acceleration or compilers? Let’s connect!

Dr. P, signing off. 🫡✌️

-

Open Source: signal-compress, semi-secure LLM compression of Signal chats

This project extracts messages from the Signal messenger app, and runs an LLM (large language model) to try and summarise what happened. This can be handy for extensive chats, and archival purposes, and is intended to be run locally to preserve privacy.

Signal is designed to be privacy-centric, and its protocol is also implemented by several other chat apps, such as WhatsApp, and Facebook’s and Skype’s “secret-mode” conversations. Therefore, I was keen to minimise how much I compromised this security model.

This project uses Docker Compose to make managing dependencies easier, since Signal encrypts its database in a way that requires some tool setup to access. Docker Compose also makes it slightly easier to control things like file and network access. The system runs the LLM locally, in an attempt to preserve the privacy of your messages, rather than sending them to a third party like OpenAI. This uses the `llama.cpp` project. I’ve open sourced my code at https://github.com/Wheest/signal-compress. See below for more technical details and design rationale.

[Read more] -

Thesis Analysis: Gender Ratios

I recently submitted my PhD thesis, in which I cited 1741 authors (1392 unique authors) over 319 papers. I was interested in the composition of my bibliography, so I cobbled together some code to analyse it. The first analysis I have run estimates the gender ratio of the authors I cite. The most reliable estimate of this gender ratio is 11.6-to-1, men-to-women. I discuss my methodology more below.
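The ratio arithmetic itself is simple once each unique author has an estimated label; the hard part, assigning those labels, is the methodology the full post covers and is not reproduced here. A minimal sketch, where the function names, author names, and labels are hypothetical placeholders, and authors labelled `unknown` are excluded from the ratio:

```python
from collections import Counter


def unique_authors(papers: list[list[str]]) -> set[str]:
    """Collapse per-paper author lists into a set of unique author names."""
    return {name for authors in papers for name in authors}


def gender_ratio(author_labels: dict[str, str]) -> float:
    """Men-to-women ratio over unique authors, ignoring 'unknown' labels.

    author_labels maps each unique author name to 'man', 'woman', or 'unknown'.
    """
    counts = Counter(author_labels.values())
    if counts["woman"] == 0:
        raise ValueError("no authors labelled 'woman'; ratio is undefined")
    return counts["man"] / counts["woman"]
```

Deduplicating by name string is the naive step here: two different people with the same name collapse into one, and one person cited under different name spellings is counted twice.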

[Read more] -

Open Source: Webcam Timelapse with Human Detection

30 days of thesis writing (64% of my active working hours!), and version 1.0 is ready!

I catalogued this journey with a timelapse, produced using a tool I developed called `desk-cheese`.

[Read more] -

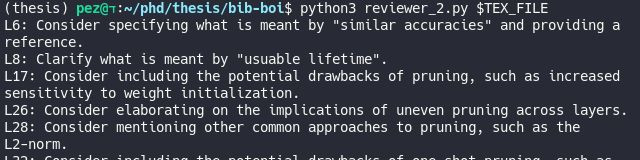

Open Source: reviewer_2.py AI writing assistant

Getting close to version 1.0 of my PhD thesis, so I’ll soon be roping in colleagues and pals to give some feedback.

But first, I’ve written a script to give the first round of feedback - automatically.

`reviewer_2.py` is an LLM-based LaTeX document review system (i.e., proofreading “AI”, using gpt-3.5-turbo). It provides feedback on your writing style and quality, and has some (sketchy) domain knowledge thanks to being trained on the web. Take its output with a pinch of salt (as you should with any human proofreader), and definitely don’t use it to write your papers. However, I’ve found it to be super-handy in catching issues that my spelling and grammar checkers have not.
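A tool like this typically has to feed a long document to the model in pieces that fit within its context window. A minimal sketch of one way to do that, splitting on `\section` commands and wrapping each chunk in a review prompt; the prompt wording and function names are hypothetical, not taken from `reviewer_2.py`, and the actual API call is omitted.

```python
import re

# Zero-width split immediately before each \section{...} or \section*{...}.
SECTION_SPLIT_RE = re.compile(r"(?=\\section\*?\{)")

# Hypothetical prompt wording, for illustration only.
PROMPT_TEMPLATE = (
    "You are reviewing part of a PhD thesis written in LaTeX. "
    "Comment on clarity, style, and any errors, quoting the text you refer to.\n\n"
    "{chunk}"
)


def split_sections(latex: str) -> list[str]:
    """Split a LaTeX source into chunks, one per \\section (plus any preamble)."""
    return [part.strip() for part in SECTION_SPLIT_RE.split(latex) if part.strip()]


def build_prompts(latex: str) -> list[str]:
    """Wrap each section chunk in a review prompt, ready to send to an LLM."""
    return [PROMPT_TEMPLATE.format(chunk=chunk) for chunk in split_sections(latex)]
```

Splitting at section boundaries keeps each request coherent for the model; very long sections would still need a further split, for example by paragraph.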

I’ve open sourced the code here; hopefully I’ll get a chance to discuss it more once my thesis is done!

[Read more] -

TVMCon2023 Appearance: Transfer-Tuning

I was delighted to be accepted as a speaker at TVMCon2023, presenting my work on Transfer-Tuning. The whole event was great, with over 1000 registrants, and 60 speakers. My talk was recorded, and is available here on the OctoML YouTube channel.

-

UoG: Love Letter to your Thesis Competition

On a whim I made a submission to the University of Glasgow’s “Love Letter to your Thesis” competition. I managed to win in the “Most Inspiring” category!
